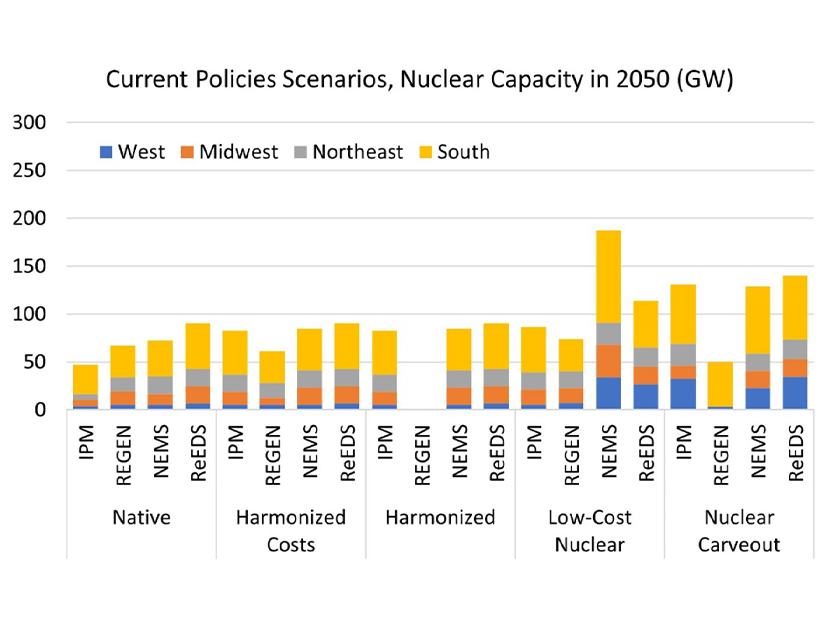

Depending on which high-level computer model is used to map out the role of nuclear in decarbonizing the U.S. power system, by 2050 the country could have anywhere from 2 to 329 GW of nuclear power in its generation mix, according to a new report from the Electric Power Research Institute (EPRI).

Such broad variability shows the inherent weaknesses of these widely used models, most of which have been developed by federal agencies, and of the assumptions embedded in them. But the report also finds strong commonalities across the models, such as the critical role decarbonization policy, cost and regional economies may play in nuclear deployment. For example, the models all suggest that regions with strong decarbonization policies, but low wind or solar resources, will tend to have more nuclear, the report says.

Similarly, lowering costs from $5,000/kW to $2,000/kW will be a key factor in increasing nuclear capacity on the grid. Lower costs, plus a zero-carbon policy, push 2050 capacity to the high end of the range: 285 to 329 GW.

EPRI CEO Arshad Mansoor underlined the integral and intertwined roles that nuclear power and computer modeling must play in energy planning, especially by federal agencies, to reach President Biden’s goal of decarbonizing the U.S. grid by 2035.

The models in the study include EPA’s Integrated Planning Model, the Energy Information Administration’s National Energy Modeling System and the National Renewable Energy Laboratory’s Regional Energy Deployment System, as well as EPRI’s Regional Economy, Greenhouse Gas and Energy model.

Benchmarking and coordinating across these models will be critical to advancing nuclear power in the next decade, Mansoor said at a launch event for the report on Thursday in D.C. “We have to make sure there is an operational [small modular reactor]. We have to make sure there is an operational [next-generation] advanced non-light water reactor. We have to make sure that the [Defense Department’s] Alaska project for microreactors actually works,” he said.

With 100% carbon-free policies, all models show the U.S. maintaining its existing nuclear plants, with lower costs again driving rapid growth. | EPRI

Without solid progress, Mansoor said, the current surge of interest in nuclear “will fizz out.”

Echoing Mansoor’s urgency, Alice Caponiti, deputy assistant secretary for reactor fleet and advanced reactor deployment at the Department of Energy, said, “It is essential that we have the ability to accurately model and communicate the benefits of nuclear energy, and therefore it’s critically important that we understand how these models account for nuclear energy production and where they have limitations and gaps.

“We need to ensure that planning tools are not biased by poor or outdated assumptions or by limitations in the tools, such as the ability of nuclear to operate flexibly or to assume that a plant is shut down at the end of its license,” Caponiti said.

The data that go into these models are therefore critical, said Brent Dixon, national technical director for nuclear systems analysis and integration at the Idaho National Laboratory. For example, with no new advanced reactors yet built, current models cannot accurately project how costs may go down over time, Dixon said.

Assumptions generated by algorithms may produce results that differ from, and are less accurate than, actual data from the field, he said. “We need to look closer to find out why there is a difference between the data and what these algorithms predict.”

Angelina LaRose, assistant administrator for energy analysis at the EIA, agreed, noting that computer models are frequently wrong, but “the goal is to make them wrong in a useful way … so policymakers can identify attractive points of leverage, potential pitfalls and unintended impacts, both beneficial and detrimental, and approaches to achieve whatever policy goal they have in mind.”

Clean, Firm Electrons

Nuclear power now generates 20% of all U.S. electricity and 50% of the country’s carbon-free power. Keeping the current fleet online for decades to come, beyond the existing licenses of individual plants, is one point of agreement across the models in the study.

The public is still divided on nuclear, with a recent poll from the Pew Research Center showing 35% of those surveyed in favor of federal support for nuclear versus 26% against and 37% neutral. But as increasing amounts of solar and wind have come onto the grid, nuclear has become a focus in the industry as a source of clean, firm, dispatchable power, Dixon said.

“All electrons are no longer the same,” he said. “Some are worth a lot more, and those are the electrons that are clean, firm electrons, and that’s your area for nuclear to compete in as we go forward.”

The study tests the different models across a set of policy, economic and technical variables (what analysts call “sensitivities”), beginning with a “native” scenario based on existing policies and regulations, plus scenarios aimed at reducing greenhouse gas emissions by either 80% or 100% by 2050. The models were also run with “harmonized” cost and technology assumptions, a low-cost scenario, and one assuming regulations providing a nuclear “carveout” that would increase nuclear on the grid over time.

The variability of results can be dramatic. With federal policy pushing an 80% carbon reduction by 2050, EPA’s model provides mostly conservative estimates of nuclear growth, below 100 GW, except with a nuclear carveout. The EIA and NREL models, on the other hand, show 250 GW of nuclear in a low-cost scenario but only 150 GW with a nuclear carveout.

The results of these different scenarios come with a big caveat: The technology and policy assumptions used in the study “do not reflect policy or market expectations of the modelers or their respective organizations” and are not intended as a “policy development exercise.” The modeling for the study was also completed before passage of the Infrastructure Investment and Jobs Act, so the law’s incentives for nuclear and other clean energy technologies were not factored into the scenarios.

But the report repeatedly shows that differences in results also provide major insights. For example, models using different “temporal resolutions,” that is, how many time slices a scenario is divided into, demonstrate that simplified resolutions based on seasonal averages or the levelized cost of energy “tend to understate the value of broader technological portfolios … and can overstate the value of solar generation,” the report says. “The need for dispatchable, firm capacity is clearer with higher temporal resolution across all policy scenarios.”
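To make the temporal-resolution point concrete, here is a toy Python sketch using made-up numbers that do not come from the EPRI study: a flat 60 GW demand and a hypothetical solar profile whose daily average exactly matches that demand. Collapsed into a single averaged time slice, solar appears to cover everything; evaluated hour by hour, the nighttime gap reveals a need for firm capacity. The names `DEMAND_GW`, `SOLAR_GW` and `firm_capacity_needed` are illustrative assumptions, and the sketch deliberately ignores storage, transmission and imports.

```python
# Toy illustration of temporal resolution (hypothetical numbers, not from the EPRI report).
# One representative day: demand and solar output in GW for each of 24 hours.

DEMAND_GW = [60] * 24  # flat 60 GW demand, purely illustrative

# Hypothetical solar profile: zero overnight, peaking midday; its daily average is 60 GW.
SOLAR_GW = [0] * 6 + [30, 70, 110, 150, 180, 180, 180, 180, 150, 110, 70, 30] + [0] * 6


def firm_capacity_needed(demand, solar):
    """Largest shortfall of solar versus demand across the given time slices (GW).

    Ignores storage, transmission and curtailment; a deliberate simplification.
    """
    return max(max(d - s, 0) for d, s in zip(demand, solar))


def average(values):
    """Arithmetic mean of a list of numbers."""
    return sum(values) / len(values)


# Coarse resolution: collapse the whole day into one averaged time slice.
coarse = firm_capacity_needed([average(DEMAND_GW)], [average(SOLAR_GW)])

# Fine resolution: evaluate every hour separately.
hourly = firm_capacity_needed(DEMAND_GW, SOLAR_GW)

print(f"Averaged resolution: {coarse:.0f} GW of firm capacity needed")  # prints 0
print(f"Hourly resolution:   {hourly:.0f} GW of firm capacity needed")  # prints 60
```

The models in the study use far richer representations than this, typically including storage and many regions, so the sketch only illustrates the direction of the effect the report describes: coarser time slices can hide the hours when firm, dispatchable capacity is needed.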

In a recorded video message, Kathryn Huff, DOE assistant secretary for nuclear energy, pointed to the study’s work on temporal resolution as one of its significant advances. “Unless you accurately capture the two-minute or two-hour sorts of time scales on which our energy system has to balance, you may not get a realistic understanding of what our grid needs to look like,” she said.

Going forward, Mansoor said, models must also be able to capture nuclear’s other sources of value, such as providing grid inertia or energy security in volatile markets. “We need an integrated model that values not just nuclear as an electricity provider but also as a tool to help industries to decarbonize,” he said.