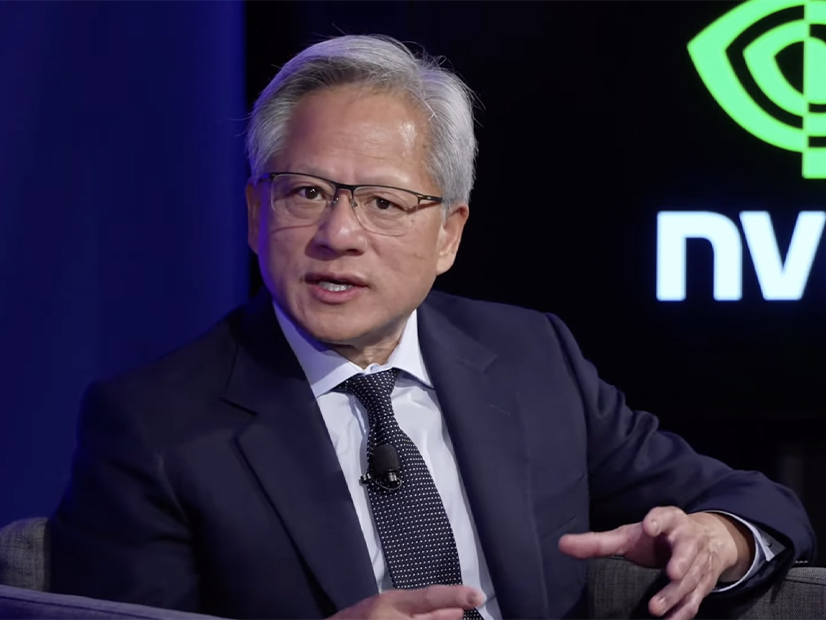

As new data centers built for artificial intelligence continually increase the demand for electricity in the U.S., one of the leaders in the field, Nvidia, is touting AI’s ability to increase the efficiency of the grid, as CEO Jensen Huang discussed at the Bipartisan Policy Center on Sept. 27.

In explaining why AI demands so much power, Huang recounted the history of Nvidia and how its approach to computer processing can be applied to the grid.

The company makes the chips, systems and software that have driven the AI boom. Before AI became mainstream, Nvidia was best known in the video game industry for making one of the two leading lines of graphics processing units (GPUs), the GeForce: large chips that can be added to a computer to help it process the highly detailed models and 3D images in modern games.

The standard design for most computers, called the “IBM system,” dates back to 1964 and uses a central processing unit (CPU), multitasking, and the separation of hardware and software by an operating system. That basic “general purpose computing” design still is used today, though with massive improvements, Huang said. Around 1993, as video game developers began transitioning from 2D to 3D graphics, Huang and his colleagues realized that some problems are so specialized that a general-purpose approach does not work well.

“Physics simulations and data processing and computer graphics … image processing — these problems have algorithms inside that are very computationally intensive,” Huang said. “And if we could take that and run it on a specialized processor, on a specialized computer, we could add a chip to the computer that makes it go 100 times faster.”

GPUs focus on those specialized tasks, while the main CPU is reserved for more general tasks. That opened up efficiencies in computing, which let the technology tackle new and more difficult tasks as video game graphics and physics became more advanced. The GeForce still is going strong for gaming PCs and also is used by Nintendo’s Switch console, Huang noted.

“Then one day, artificial intelligence found us, and so accelerated computing … was an observation about the future of computing that turned out to be right,” Huang said.

AI queries use more energy than traditional internet searches, and it takes significant energy for an AI network to “learn.”

“The reason why it consumes a lot of energy is that the artificial intelligence network, through trial and error, is trying to figure out how to predict something, and it’s recognizing patterns and relationships among tons and tons of information,” Huang said.

Eventually, AI networks comb through their training datasets thoroughly enough to understand them and make predictions based on them. “These data centers could consume, today, maybe 100 MW,” Huang said. “And in the future, it’ll probably be … 10 times, 20 times more than that.”

Those massive loads do not have to be built in one place, Huang said. Data centers can be built where energy supplies are plentiful. (See Industry Considers Building its Own Generation to Decarbonize.)

“There are places in the world where we have excess energy,” Huang said. “It’s not necessarily connected to the grid. It’s hard to transport that energy to population, but we can build a data center near where there’s excess energy and use the energy there.”

Siting new data centers in energy-rich areas is one way of getting around the issue of interconnecting resources to the grid and transmitting energy to population centers, Huang said.

But AI also could lead to more efficient use of energy in other applications, Huang said, pointing to Nvidia’s work on weather forecasting, which he said will make that process much more efficient than the supercomputers used now.

Making the grid smarter is another application for AI that could help save significant energy, he said. AI could help integrate sustainable energy, operate two-way vehicle charging and find faults on the grid so they can be fixed before they lead to a reliability lapse.

The growth in data centers has given a shot in the arm to nuclear power, with Constellation Energy recently announcing a deal with Microsoft that will reopen the retired Unit 1 reactor at Three Mile Island. (See Constellation to Reopen, Rename Three Mile Island Unit 1.)

“Nuclear is going to be a vital, integral part of this,” Huang said. “No one energy source will be sufficient for the world, and so we’ll have to find that balance.”

Efficiency has fueled Nvidia’s success, with its approach using far less energy for complex tasks than standard, general-purpose computing, he added. Efficiency is going to be key to meeting all the new demand going forward too.

“I would really love to see our power grid be smart today,” Huang said. “Our nation’s power grid was built a long time ago because we’re one of the earliest countries to become prosperous, and that power grid could benefit from the insertion of artificial intelligence and smart technology into it. And that smart grid would … help us properly provision technology to the right places.”

A Constellation executive asked Huang whether he agreed with some who have argued that new data centers should add clean power to the grid, as opposed to using what already is available for their purposes. Constellation, the largest U.S. nuclear plant owner, in addition to reopening Three Mile Island, is interested in co-locating data centers with plants that still are in operation, an arrangement that FERC and other regulators are examining. (See Talen Energy Deal with Data Center Leads to Cost Shifting Debate at FERC.)

Huang answered that he has met with the Biden administration multiple times and that its current policy is to allow U.S. companies to build as many data centers domestically as they can. The administration is interested in helping the sector with permitting and connecting to the grid to make that possible, he said.

“Building the AI infrastructure of our country is a vital national interest,” Huang said. “And although it consumes energy to train the models, the models that are created will do the work much more energy efficiently. And so, when you think about the longitudinal lifespan of an AI, the energy efficiency and the productivity gains that we’ll get from it, from an industry, from our society is going to be incredible.”

AI is one source of demand that does not require 24/7 reliable power, he added. The processes can be shut down for 5% of the year when demand is peaking elsewhere on the grid and then resume where they left off as demand from other users falls.