A new study from Duke University says the existing U.S. power system could handle 126 GW of new demand with no additional generation if artificial intelligence data centers can be persuaded to cut their power use during times of peak demand, curtailing as little as 1% of their annual energy consumption.

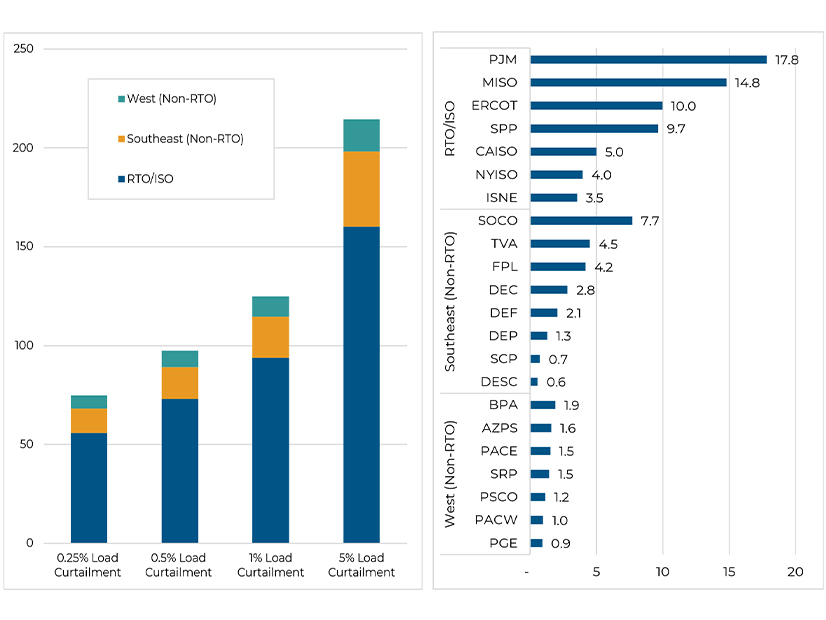

The “Rethinking Load Growth” report looks at 22 balancing authorities — RTOs, ISOs and large utilities — representing 95% of the country’s peak load and finds that each could add varying amounts of new load without exceeding its maximum capacity “provided the new load can be temporarily curtailed as needed.”

The report defines system curtailment, or flexibility, as a data center’s ability to temporarily reduce its power consumption “by using onsite generators, shifting workload to other facilities or reducing operations,” thus creating “curtailment-enabled headroom” to add new load.

For example, the study estimates that at a 1% curtailment rate, PJM could use curtailment-enabled headroom to integrate more than 23 GW of new load. ERCOT could add 14.7 GW, and Southern Co. could add 9.3 GW.

Lower curtailment rates still could provide significant headroom, the study says, with PJM opening up 17.8 GW at 0.5% curtailment and 13.3 GW at 0.25% curtailment.

The length of curtailment periods also would vary: at a 1% curtailment rate, curtailments would last no more than 2.5 hours, while at a 0.25% rate they would last only 1.7 hours.
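The idea behind curtailment-enabled headroom can be illustrated with a short Python sketch: add a flat block of new load, curtail it only in the hours when the total would exceed the system's capacity, and search for the largest block whose curtailed energy stays within a chosen share of its potential annual consumption. This is not the Duke researchers' code or method. The function names, the synthetic load profile and the simplifications (hourly data in MW, a capacity threshold at or above the historical peak, no reserve margins or transmission limits) are all assumptions made for illustration.

```python
"""Hypothetical sketch of curtailment-enabled headroom (not the Duke code).

Assumptions: hourly system load in MW, a capacity threshold no lower than
the highest hourly load, and new load added as a flat block that is
curtailed only in hours when existing load plus new load exceeds capacity.
"""


def curtailment_per_hour(load, capacity, new_load):
    """MW the new load must shed in each hour to keep the total at capacity."""
    return [min(new_load, max(0.0, mw + new_load - capacity)) for mw in load]


def curtailment_rate(load, capacity, new_load):
    """Curtailed energy as a share of the new load's potential annual energy."""
    if new_load <= 0:
        return 0.0
    shed = sum(curtailment_per_hour(load, capacity, new_load))
    return shed / (new_load * len(load))


def headroom(load, capacity, max_rate, tol=1e-3):
    """Largest flat new load (MW) whose curtailment rate stays within max_rate.

    With capacity >= max(load), the rate never decreases as the new load
    grows, so bisection on [0, capacity] converges to the boundary.
    """
    lo, hi = 0.0, float(capacity)  # assumed upper bound for the search
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if curtailment_rate(load, capacity, mid) <= max_rate:
            lo = mid
        else:
            hi = mid
    return lo


def mean_event_hours(load, capacity, new_load):
    """Average length, in hours, of consecutive runs of curtailed hours."""
    events, run = [], 0
    for shed in curtailment_per_hour(load, capacity, new_load):
        if shed > 0:
            run += 1
        elif run:
            events.append(run)
            run = 0
    if run:
        events.append(run)
    return sum(events) / len(events) if events else 0.0


if __name__ == "__main__":
    import math

    # Synthetic, purely illustrative year: a daily cycle plus a summer peak.
    load = [
        700 + 150 * math.sin(2 * math.pi * h / 24)
        + 150 * math.exp(-(((h - 4800) / 600) ** 2))
        for h in range(8760)
    ]
    cap = max(load)
    for rate in (0.0025, 0.005, 0.01):
        mw = headroom(load, cap, rate)
        hours = mean_event_hours(load, cap, mw)
        print(f"{rate:.2%}: {mw:.0f} MW of headroom, mean event {hours:.1f} h")
```

Bisection works here because, when capacity is at or above every hourly load, curtailed energy is a convex function of the new load that starts at zero, so the curtailment rate can only rise as the block grows. On real hourly data the results would depend heavily on the capacity threshold chosen.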

“These results suggest that the U.S. power system’s existing headroom … is sufficient to accommodate significant constant new loads, provided such loads can be safely scaled back during some hours of the year,” the report says, framing flexibility as a win-win for all stakeholders.

The U.S. still will need to build new generation and transmission to meet anticipated demand growth, the report says. “[But] flexible load strategies can help tap existing headroom to more quickly integrate new loads, reduce the cost of capacity expansion and enable greater focus on the highest-value investments in the electric power system.”

“The immensity of the challenge underscores the importance of deploying every available tool, especially those that can more swiftly, affordably and sustainably integrate large loads,” the report says. “The unique profile of AI data centers can facilitate more flexible operations, supported by ongoing advancements in distributed energy resources.”

Data Centers and DR

Authored by researchers at Duke’s Nicholas Institute for Energy, Environment and Sustainability, the study grounds its argument for flexibility in the pressures now building around demand growth. Data centers often have aggressive schedules for going online but can face yearslong interconnection and supply chain delays.

Lead times for ordering transformers have stretched from less than a year to two to five years, and prices have risen 80%, according to June 2024 figures from the president’s National Infrastructure Advisory Council, the report says. Wood Mackenzie has reported that lead times for high-voltage circuit breakers were nearing three years at the end of 2023.

The report notes the growing interest in co-locating data centers with existing or new generation but says co-location is not likely to be “a long-term, systemwide solution.”

The fact the U.S. grid is designed with headroom to accommodate relatively short periods of peak demand and often is underused provides a further rationale for leveraging this built-in flexibility, the report says. Better use of the system can reduce costs for consumers by “lowering the per-unit cost of electricity — and [reducing] the likelihood that expensive new peaking plants or network expansions may be needed.”

The report notes that some grid operators and utilities already are experimenting with flexible interconnection strategies, such as ERCOT’s interim treatment of new large loads as “controllable” resources, allowing them to go online in less than two years.

Still another argument for flexibility is the recent release of DeepSeek, the Chinese AI platform that claims to use significantly less energy than U.S. AI models. Here, the report says, system flexibility could serve as a hedge against uncertainty about future demand.

But getting data centers to participate in traditional demand response programs — which have long provided system flexibility — has been difficult because of centers’ often inflexible, 24/7 demand profiles. Further, traditional DR programs have been designed for “traditional industrial consumers … with different incentives and operational specifications.” The report suggests new programs should be developed to align with data centers’ needs, including “streamlined participation structures, tailored incentives and metrics that reflect the scale and responsiveness of data centers.”

New AI data centers, with “evolving computational loads … are more amenable to load flexibility,” the report says. The “training” of AI models allows for flexible timing and for distributing workloads across different data centers. An EPRI report cited by the Duke researchers found that “optimizing data center computation and geographic location … to capitalize on lower electric rates during off-peak hours” could provide cost savings of 15% and reduce strain on the grid during high-demand hours.
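EPRI's savings estimate is not reproduced here, but the basic mechanism of time shifting can be sketched in a few lines of Python: given hourly prices over a scheduling window, an interruptible job runs in the cheapest hours instead of as soon as it is submitted. The function names and the price series are hypothetical, geographic shifting between sites is not modeled, and the greedy choice is only optimal under the stated assumptions (prices known in advance, free pause and resume, constant power draw).

```python
"""Hypothetical sketch: shifting an interruptible training job to cheap hours.

Assumptions: hourly prices are known in advance, the job can pause and
resume freely, and it draws the same power in every hour it runs.
Geographic shifting between sites is not modeled.
"""


def cheapest_hours(prices, hours_needed):
    """Return, in time order, the indices of the cheapest hours in the window."""
    if not 0 <= hours_needed <= len(prices):
        raise ValueError("hours_needed must fit inside the price window")
    by_price = sorted(range(len(prices)), key=lambda h: prices[h])
    return sorted(by_price[:hours_needed])


def energy_cost(prices, hours, mw):
    """Cost of running at a constant `mw` in the given hours ($ if prices are $/MWh)."""
    return sum(prices[h] for h in hours) * mw


if __name__ == "__main__":
    prices = [50, 80, 120, 90, 40, 30]  # illustrative $/MWh, not real data
    run_now = list(range(3))  # start immediately and run for 3 hours
    shifted = cheapest_hours(prices, 3)
    print(shifted, energy_cost(prices, run_now, 10), energy_cost(prices, shifted, 10))
    # prints [0, 4, 5] 2500 1200
```

Because a flexible job pays only for the hours it actually runs, picking the cheapest hours minimizes its energy bill; the same choice also moves consumption away from the high-price hours that tend to coincide with peak demand.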

The report points to three trends that could “create further opportunities for load flexibility now than in the past.” The first is construction and interconnection delays that raise costs and lengthen timelines for getting new centers online. The second is the growth of clean, distributed technologies that offer lower-cost, behind-the-meter generation.

The third is the growth of hyperscale data centers and their computational loads, “which is lending scale and specialization to more sophisticated data center operators,” the report says. “These operators, seeking speed to market, may be more likely to adopt flexibility in return for faster interconnection.”