WASHINGTON, D.C. – NARUC’s Winter Policy Summit focused on the main issue facing the power industry — how to reliably and affordably interconnect new large load customers.

Its annual meeting in November was held just after the U.S. Department of Energy filed an Advance Notice of Proposed Rulemaking (ANOPR) asking FERC to claim jurisdiction over large loads connecting to the transmission system. That led the state regulator group to issue a resolution seeking to preserve state jurisdiction over customer interconnection. (See Regulators Urge FERC to Honor State Authority over Large Load Interconnections.)

FERC is working its way through voluminous comments, with DOE asking for action on the ANOPR by April 30. FERC Chair Laura Swett told state regulators the commission’s final action would be guided by the law.

“We are very committed to doing everything that we can within our jurisdiction under the law, but I take that very seriously,” Swett said Feb. 10. “There are some pretty clear lines drawn between federal and state jurisdiction, and some a little bit blurry because these are new issues. But I want you all to know that we are only going to act within the law as it has been designated by the courts.”

States have the important role of siting the generation that is needed to meet rising demand, which means federal and state regulators have to work together to address the challenge, she said.

Swett has been on the job for four months, but plenty has happened in that time, including a major winter storm that stressed, but did not break, the bulk power system.

“My first takeaway is we cannot retire generation without replacing it,” Swett said. “So, because the grid is so tight and supply must meet demand head-to-head, we have to ensure that if we’re going to take large generation offline, then there has to be something to meet that — even maintain the status quo, which we’re already stressing.”

FERC recently approved new rules from PJM to more easily transfer capacity interconnection rights from retiring generators, which Swett said could be a model for the rest of the country. (See FERC Approves PJM CIR Transfer Proposal.)

While the grid is stressed, its operators performed well during the recent storm, avoiding any resource adequacy-related outages despite the tight conditions across much of the country, Swett said. Of the generation keeping the lights on at the storm’s peak, 75% was dispatchable.

“From where I sit, it’s clear to me that we need more dispatchable generation on a system,” Swett said. “And so not only does that mean not retiring it, but it also probably means building more of it.”

States have the bulk of the authority to get new generation online, with FERC limited to changing wholesale market rules so that new projects can earn fair returns. But the feds have a bigger role when it comes to the main fuel that new dispatchable plants would burn: natural gas.

“So, FERC can help, I think, by permitting more infrastructure as quickly and efficiently and legally durably as possible,” Swett said. “Because if everyone’s saying they need to build gas plants in order to keep our lights on, and our pipelines in many places of the country are constrained or don’t even exist at all, then it seems to me that FERC has to be looking at ways to ensure that we can get the gas to the generation that we need.”

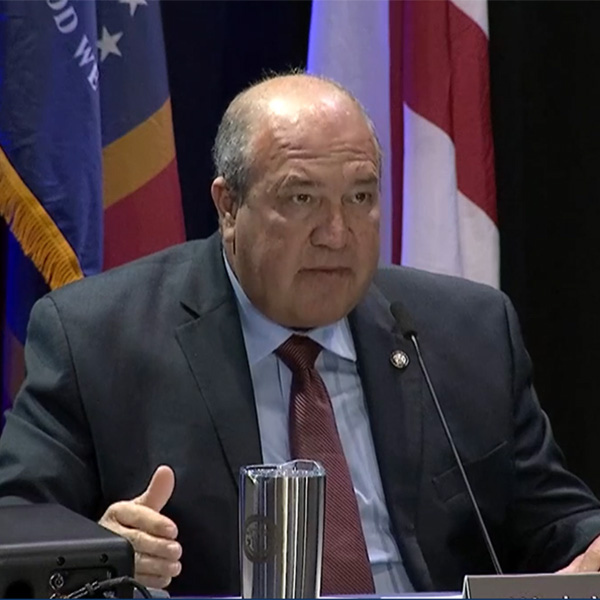

Speaking earlier at the conference, FERC Commissioner David LaCerte said expanding natural gas infrastructure is one of his priorities.

“The economists at FERC could not be more clear: If we add more natural gas capacity to pipelines, that should drive prices down for the ratepayer,” LaCerte said Feb. 9.

LaCerte said that for every question that comes before him at FERC, he considers its impact on reliability and affordability. But with such major issues in front of the commission, the priorities are more difficult to balance.

In addition to the uncertainty around the ANOPR, major changes are being debated in the stakeholder process in PJM and elsewhere. LaCerte told the state regulators to come up with their own proposals.

“That’s probably the ultimate path to success — is telling the folks at FERC, and folks on the Hill, folks on your RTOs, what works best in your own backyards,” LaCerte said. “It’s very hard to create a rule from Washington, D.C., that has durability and longevity across the entire country. It’s almost impossible.”

While connecting large loads presents daunting challenges, the issue comes with opportunities, said Nick Elliot, policy adviser to the White House Energy Dominance Council.

“One of the things that’s inescapable in the utility side is just how much of the total cost structure is fixed and how little is variable,” Elliot said. “And the key message I’ve got there is like scale — selling more megawatts of a fixed cost system leading to better utilization and deflationary overall to prices.”

As long as the costs and risks are properly allocated, he said, demand from data centers and other large loads can help lower rates for other customers.

“Whether it’s a regulated area or a deregulated area, you need to be trying to develop policies where new large loads are accompanied by new large generation, and you grow the system in a balanced way on the capacity side,” Elliot said. “Large loads need to be paying for the transmission associated with the build. And overall, that is deflationary in terms of a better, more effective utilization of the overall system.”

Indiana Michigan Power has a large load tariff, a type of rate that is becoming more common across the country. Meanwhile, the Northern Indiana Public Service Co. (NIPSCO) has set up a competitive generation subsidiary, or a “GenCo,” to serve large loads, said David Veleta, a commissioner on the Indiana Utility Regulatory Commission.

“The goal of the GenCo structure is ensure that a new customer bears 100% of the cost and risks of new generation that’s built or purchased by the GenCo, and I think that’s like an optimization of what the large load tariffs do,” Veleta said. “The large load tariffs do a good job, but I think that the GenCo model takes it to the next level, and I think that’s just a better step.”

Arizona Public Service has come up with a formula rate that can be updated every year, so consumers do not get bill shocks from several years of increases hitting at once. In its most recent rate case, it asked regulators to approve a 14% increase for most customers and 45% for data centers, said its Senior Vice President Jose Esparza.

“We don’t have a GenCo model, but what we were offering is what we’re calling a subscription rate,” Esparza said. “Whereas you’ll take a portfolio of resources, the customer will have to put up a certain amount of collateral, agree to pay a 20-year agreement or 15-year agreement, to buy down and depreciate those costs as much as possible.”

Those special contracts are reviewed by the Arizona Corporation Commission, which has pushed APS to ensure that “growth is going to pay for growth” with minimum-take requirements and other terms, he added.

The growth of data centers and other large loads comes with a couple of types of risk, said Google’s Head of Energy Market Innovation Briana Kobor.

“We have stranded cost risk — the flip side of that being risk of underbuild, right?” Kobor said. “We need to get the right signal in front of our utilities to empower them to do what they do best, which is long-term, least-cost planning for an efficient system. And then we have cost allocation risk. And I think it’s really important for us to separate those concepts as we think about building the new models that we need to enable large loads to come online.”

While new models like those from NIPSCO and APS are helpful, a much more common trend among state regulators has been what Google calls the “capacity commitment framework.” It has several pillars: broad applicability based on customer size alone, long-term contracts of 10 to 15 years, significant minimum demand charges, and fair and transparent exit fees so other ratepayers are protected and capacity can be freed up for a more viable large customer.

“It enables the utility to have clarity as to what the build signal is. If they have long-term contracts with minimum revenue guarantees, they are empowered to go and do the next step, which is figure out what we need to build,” Kobor said. “And then once we’ve figured out what we need to build, only then do we know how much it costs and how we should be allocating those revenues across all customer classes.”

Google is committed to paying its fair share of the costs required to serve its growing fleet of data centers, she added. The tech giant is cautious about getting “too creative” and moving away from the basic shared system model that has served the grid well for a century.

“When we start to bifurcate planning into ‘these are the plants that are serving large loads; these are the plants that are serving everybody else’ — I mean, that’s not how the electricity system works. That’s not how electrons flow,” Kobor said. “And, so, I spent my entire career in regulation and rate design, and I know a lot of people are going to be very well employed for a very long period of time figuring out what the right cost allocation methods are.”

The focus on load growth comes as residential customers in particular have seen their bills climb faster than inflation in recent years. One narrative is that data centers are the main culprit for higher prices. But their impact on pricing depends on the region.

“Are they putting measures in place to accommodate that load growth, or are they chasing it?” Electricity Customer Alliance Executive Director Jeff Dennis said. “PJM is in a unique set of circumstances that we can talk about, certainly, where they’re in a position where they’re kind of chasing it.”

But if supply can catch up to demand, load growth can lead to lower prices, especially on a system that needs spending to modernize old transmission and distribution infrastructure.

“They, in some ways, are coming at the right time as we’re in this period of increased distribution spending, increased needs to bolster the transmission grid,” Dennis said.